The conference for video devs

Pack your bags, renew your passports; for the first time, Demuxed is coming to London!

October 29th & 30th

BFI Southbank, London, UK

Want to sponsor? Get in touch

Join our mailing list

Venue & location

Belvedere Rd, London SE1 8XT

We're hosting Demuxed 2025 at BFI Southbank, which is basically the perfect venue for a bunch of video nerds to gather and argue about codecs. Located right on the Thames with those iconic brutalist concrete vibes, it's been the home of serious film culture since the 1950s.

The BFI has been preserving and celebrating moving images for decades, so there's something beautifully fitting about bringing the Demuxed community to a place that's spent so much time thinking about how to keep video alive for future generations. Plus, the location puts you right in the heart of London's South Bank, with proper pubs and decent coffee within walking distance.

Speakers

Chaitanya Bandikatla

TikTok

Talk Overview

Christian Pillsbury

Mux

Talk Overview

David Payr

Mediabunny

Talk Overview

David Springall

Yospace

Talk Overview

Farzad Tashtarian

University of Klagenfurt

Talk Overview

Hadi Amirpour

University of Klagenfurt

Talk Overview

Henry McIntyre

Vimeo

Talk Overview

Joanna White

British Film Institute National Archive

Talk Overview

Joey Parrish

Talk Overview

Jonathan Lott

AudioShake

Talk Overview

Jordi Cenzano

Meta

Talk Overview

Julien Zouein

Trinity College Dublin

Talk Overview

Karl Shaffer

Talk Overview

Luke Curley

hang.live

Talk Overview

Matt McClure

Demuxed

Talk Overview

Mattias Buelens

Dolby Laboratories

Talk Overview

Nicolas Levy

Qualabs

Talk Overview

Nigel Megitt

BBC

Talk Overview

Oli Lisher

Talk Overview

Olivier Cortambert

Yospace

Talk Overview

Pedro Tavanez

Touchstream

Talk Overview

Prachi Sharma

JioHotstar

Talk Overview

Rafal Leszko

Livepeer

Talk Overview

Ryan Bickhart

Amazon IVS

Talk Overview

Sam Bhattacharyya

Katana

Talk Overview

Steve Heffernan

Mux

Talk Overview

Ted Meyer

Talk Overview

Thasso Griebel

Castlabs

Talk Overview

Thomas Davies

Visionular

Talk Overview

Tomas Kvasnicka

CDN77

Talk Overview

Vittorio Giovara

Vimeo

Talk Overview

Zac Shenker

Fastly

Talk Overview

Zoe Liu

Visionular

Talk Overview

Chaitanya Bandikatla

TikTok

Tomas Kvasnicka

CDN77

Improving Video Delivery and Streaming Performance with a "Media-First" Approach for CMAF

In our ongoing effort to enhance live streaming efficiency and viewer experience, we at TikTok partnered with CDN77 to explore a new approach called “Media First”. This talk will examine how Media First transforms segment-based video delivery by merging multiple CMAF chunks into unified media objects and leveraging intelligent CDN edge logic. By combining media segments and applying dynamic URL rewriting at the edge, the CDN ensures that each viewer receives the freshest and most cacheable version of the video, even under real-time constraints. By shifting cache logic from “manifest-first” to “media-first,” we observed significant improvements in cache hit ratio, startup time, and stream stability. Specifically, we reduced origin fetches during peak events, improved playback startup predictability across diverse network conditions, and achieved a 2.5% reduction in first-frame delay and a 9% reduction in stalling. This talk highlights the architectural and operational collaboration between TikTok and CDN77 engineering teams, including lessons learned from edge compute deployments, manifest adaptation, and adaptive delivery strategies.

Key Takeaways:
* Media First Architecture: How merging segment groups and rethinking cache hierarchy enables higher media availability and CDN efficiency.
* Edge URL Rewriting: How real-time request rewriting delivers the most current media while minimizing origin MISS traffic.
* Performance Gains: Insights into how Media First improves QoE metrics, including startup latency, resolution stability, and playback resilience.
* Cross-Team Innovation: Lessons from collaborative development between application logic and CDN infrastructure to advance live streaming capabilities.

This talk will be co-presented by engineers from both companies and will include real-world production metrics and implementation details.

Christian Pillsbury

Mux

The Great Divorce: How Modern Streaming Broke Its Marriage with HTTP

For over a decade, HTTP Adaptive Streaming was the poster child for how standards should work together. HLS, DASH, and their predecessors succeeded precisely because they embraced HTTP's caching, took advantage of CDNs, and worked within browser security models. But here's the inconvenient truth: many of our newest streaming "innovations" are systematically breaking this symbiotic relationship. Multi-CDN content steering requires parameterizing URLs (for both media requests and content steering polling) and client/server contracts that inherently and literally remove browser cache from the equation. CMCD v2 abandons JSON sidecar payloads for either query parameters that cache-bust every request or custom headers that trigger double the network requests due to CORS preflight checks. Signed URLs with rotating tokens make browser caching impossible. We've become so focused on solving today's edge cases that we're undermining the fundamental advantages that made HTTP streaming successful in the first place. In this session, you'll discover how seemingly innocent streaming standards are creating a hidden performance tax of 2x network requests, cache invalidation, and resource waste. You'll learn why CORS preflight requests and RFC 9111 cache key requirements are putting streaming engineers in impossible positions, forcing them to choose between security and performance. Most importantly, you'll walk away with practical strategies using service workers and cache API to work around these issues client-side, plus concrete recommendations for how we can evolve both streaming and web standards to work together instead of against each other. Because the future of streaming isn't about bypassing the web—it's about making the web work better for streaming.
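To make the cache-busting point concrete, here is a minimal sketch (not the speaker's method) of what a client-side layer could do before consulting its own cache: drop query parameters that change on every request but do not change the response body. `CMCD` is the query argument name used by the CMCD specification; the `token` parameter name, the `cache_key` helper, and the example URLs are assumptions made for illustration.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Query parameters that vary per request but do not change the response body.
# "CMCD" is the CMCD query argument; "token" is a hypothetical name for a
# rotating signature parameter.
VOLATILE_PARAMS = {"CMCD", "token"}


def cache_key(url: str) -> str:
    """Return the URL with volatile query parameters removed."""
    parts = urlsplit(url)
    kept = [
        (name, value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
        if name not in VOLATILE_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


# Two requests for the same segment that differ only in telemetry and token.
a = cache_key("https://cdn.example.com/v/seg_42.m4s?CMCD=bl%3D2100%2Cbr%3D3200&token=abc")
b = cache_key("https://cdn.example.com/v/seg_42.m4s?CMCD=bl%3D4500%2Cbr%3D3200&token=xyz")
print(a == b)
```

A cache keyed on the full URL would treat these two requests as unrelated objects; keyed on the normalized form, the second request can be a hit.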

David Payr

Mediabunny

Performant and accessible client-side media processing with Mediabunny

Traditionally, web apps that needed media processing had to choose between server-side pipelines, massive FFmpeg WASM builds, or work with the limited set of HTML5 media APIs. These solutions were often slow, expensive, or too inflexible for more complex use cases. WebCodecs changed the game by exposing direct access to the browser’s media pipeline, making us dream about what the web could deliver: powerful, super-fast video editors, DAWs, recorders, converters, all running entirely on-device. But if you've used WebCodecs, you'll know it's low-level, somewhat advanced, and only one piece of the puzzle. To build serious media apps, you need much more. Enter Mediabunny, a new library purpose-built from scratch for the web to be a full toolkit for client-side media processing. It unifies muxing, demuxing, encoding, decoding, converting and streaming under a single API and embraces the web as a platform. I'll share the origin story of how a 3D platformer game accidentally pulled me into media processing, and how lessons learned from my previous libraries mp4-muxer and webm-muxer shaped Mediabunny's final design. We'll briefly explore its architecture and show a demo, and discuss how it can power a new generation of highly performant web-based media tools.

David Springall

Yospace

Olivier Cortambert

Yospace

Another talk about ads, but this time what if we talked about the actual ads??

In 2019, the Interactive Advertising Bureau (IAB) released the Secure Interactive Media Interface Definition (SIMID). This format allows Publishers/Advertisers to have a player display an Ad on top or alongside their stream. Two years later, Apple engineers released HLS Interstitial and that’s how Server Guided Ad Insertion (SGAI) was born. Fast forward to 2025, DASH is getting support for SGAI and SGAI is trending. And one of the reasons it is trending is that it allows players to “easily” display new ad formats - in the form of an ad on top of or alongside the stream. Now, we are seeing demos with streams having fancy ads that fade in/out, play alongside content in the corner or take an L-shape part of the screen. But no one is telling you how you sell this space, how you signal a side-by-side ad, what type of media formats the ads need to be etc… Actually, it’s not that no-one talks about it, it’s that no-one in the streaming industry talks about it, but the ad folks (ie. the IAB) are actually working on it. And, *spoiler alert*, they talk about SIMID not SGAI. What if we (the streaming tech world) actually talked to our customers (the ad ecosystem)? What if we actually focused on what really matters: better ads for a better viewer experience? In this talk, we will go through the different cognate words that make it hard for us to understand ad people. We will of course have a couple of words from our marketing friends who are great at finding words that make everything look 100x better. Can you guess what linear means for an ad guy? Or, what’s the difference between an interactive and an immersive ad? (hint: it’s not Virtual Reality!). And what about live rewind ads - how cool do they actually sound in the ad world? We will also review the different standards bodies: on one side, the IAB (for the ads); on the other, Apple, SVTA, CTA-WAVE (for the streaming side). Have you ever met an ad person at one of your Streaming Tech Talks? 
I don’t mean someone that talks about SSAI or SGAI, but someone that talks about inventory, supply, and demand. Have you ever thought about fill-rates, CPMs, and what affects those metrics? We will look at what we can do to help the ad ecosystem maximise every advertising opportunity, so that generating more revenue does not have to mean more ads on more parts of the screen every time. Finally, we will talk about the trendy ad formats that are floating around and all the challenges they bring (but from an ad tech side, not a streaming tech side). This is going to be the type of talk where we all have an opinion and we all know the challenges (SGAI adoption, device capabilities, player complexity). But it’s time to get out of our bubble and think of the challenges that the ad ecosystem faces and how we can help. How do we avoid showing a cropped ad or, worse, cropping the most important part of the original stream? May our shared love for XML (VAST/DASH) lead us to a higher fill rate!

Farzad Tashtarian

University of Klagenfurt

Beyond Captions: A Practical Pipeline for Live AI-Generated Sign Language Avatars

We all treat closed captions as the baseline for accessibility, but for millions of users, they're not enough. Sign languages possess unique grammar, emotion, and context that plain text simply can't capture. While human interpreters are the gold standard, they don't scale for the massive world of live streaming. So, how can we do better? This talk chronicles our journey to build a system that renders expressive, real-time sign language avatars directly into a live HTTP Adaptive Streaming (HAS) feed. We broke this enormous challenge down into a four-stage AI pipeline: Audio-to-Text, Text-to-Gloss (the lexical components of sign language), Gloss-to-Pose, and finally Pose-to-Avatar. But the real engineering puzzle wasn't just the AI models, it was where to run them. Every decision in a distributed system is a trade-off. This talk is a deep dive into the system architecture and those tough decisions. We'll explore questions like:
- Should Audio-to-Text run in the cloud for maximum accuracy, or can the edge handle it to reduce latency?
- How can we use edge servers to handle Text-to-Gloss translation to support regional sign language dialects?
- What are the performance-versus-personalization trade-offs of offloading pose generation and avatar rendering to the client device?

This isn't an AI-magic talk. It's a practical look at the system-level challenges of integrating a complex, real-time AI workload into the video pipelines we work with every day. Attendees will leave with a framework for thinking about how to build and deploy novel, real-time accessibility features, balancing latency, scalability, and user experience.

Hadi Amirpour

University of Klagenfurt

Your Player is Smarter Than You Think: Self-Learning in Adaptive Streaming

Bitrate ladders are getting smarter—and it’s not just about resolution anymore. With modern streaming spanning everything from underpowered devices to GPU-rich endpoints, it’s time for bitrate ladders to adapt to the capabilities of the client, not just the content. Enter DeepStream: a scalable, per-title encoding approach that delivers base layers for legacy CPU-only playback and enhancement layers for GPU-enabled clients, powered by lightweight, super-resolution models fine-tuned to the content itself. DeepCABAC keeps these enhancement networks compact, and smart reuse of scene and frame similarities slashes the cost of training. But training every frame of a video? That doesn’t scale. To make deep video enhancement practical, Efficient Patch Sampling (EPS) pinpoints just the most information-rich regions of each video using fast DCT-based complexity scoring. The result? Up to 83% faster training with virtually no quality loss—saving compute without compromising QoE. And here's where it gets really exciting: during playback, the client starts learning. When a resolution switch happens, previously downloaded high-res segments are downsampled and used to locally train or fine-tune super-resolution models in real-time—right on the device. No extra bits, no cloud-side retraining. Just smarter, self-improving video playback that adapts to network conditions, device power, and content complexity on the fly. It’s a glimpse into the future of adaptive streaming—intelligent, efficient, and personal.
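The abstract does not spell out how EPS computes its scores, so the following is only a generic sketch of DCT-based complexity scoring under stated assumptions: each patch is an 8×8 block of luma values, its score is the sum of absolute AC coefficients of a 2-D DCT-II, and the highest-scoring patches are kept for training. The function names `dct2`, `complexity`, and `top_patches` are hypothetical.

```python
import math


def dct2(block):
    """Naive orthonormal 2-D DCT-II of a square block (list of lists of floats)."""
    n = len(block)

    def alpha(k):
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    # cos_table[u][x] = cos(pi * (2x + 1) * u / (2n))
    cos_table = [
        [math.cos(math.pi * (2 * x + 1) * u / (2 * n)) for x in range(n)]
        for u in range(n)
    ]
    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            total = 0.0
            for x in range(n):
                for y in range(n):
                    total += block[x][y] * cos_table[u][x] * cos_table[v][y]
            out[u][v] = alpha(u) * alpha(v) * total
    return out


def complexity(block):
    """Sum of absolute AC coefficients: close to 0 for a flat patch."""
    coeffs = dct2(block)
    return sum(abs(c) for row in coeffs for c in row) - abs(coeffs[0][0])


def top_patches(patches, k):
    """Return the indices of the k highest-scoring patches, highest first."""
    scores = [complexity(p) for p in patches]
    return sorted(range(len(patches)), key=lambda i: scores[i], reverse=True)[:k]


flat = [[128.0] * 8 for _ in range(8)]
gradient = [[float(16 * y) for y in range(8)] for _ in range(8)]
checker = [[255.0 if (x + y) % 2 else 0.0 for y in range(8)] for x in range(8)]
selected = top_patches([flat, gradient, checker], 2)
print(selected)
```

The flat patch carries almost no AC energy and is dropped, while the textured patches are kept; a production implementation would use a fast DCT rather than this quadruple loop.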

Henry McIntyre

Vimeo

Silvertone Streamer: Give me your quirks; for they are my features

If we don’t understand the problems of our past, how can we create the problems of our future? I grew up "seeing myself on TV". My father, a CBS veteran, put a mirror in place of the tube on a 1950s Silvertone CRT TV. I have since inherited this heirloom and was looking for a way to connect with my dad’s analog video world. I realized the only things I knew about these old TVs were all the things that could go wrong with them. It turns out the why behind the weird is just as fascinating as you’d hope. In this talk we will update this classic television with an LCD screen, a whole lot of custom fabrication, and live streams from the freakin’ internet… all while preserving the look and literal feel of the original. Join me in exploring what we can learn by resurrecting the weird and wonderful quirks of standards loved and standards lost, using the very technology that replaced them. Let’s get UTT (under the top): The mirror is replaced with an LCD monitor, and the original TV knobs are put back to work using custom-designed and 3D-printed mounts and adapters. A microcontroller reads voltage information from fresh analog hardware and passes it along to a Node.js server, then via WebSocket to a static web page. The channel knob is a gloriously clunky 10-pole rotary switch voltage divider that changes live stream sources in HLS.js. Four "feature" knobs, using standard lamp switches, let us toggle classic CRT quirks using Three.js shaders: grayscale mode, scanlines, barrel distortion with a vignette effect, and horizontal/vertical hold drift. Each of these long-lost “bugs” offers an opportunity to learn and recreate electron gun physics, magnetic coil drift, and above all else: NTSC standards. Come reflect with me, won’t you? Those problems of tomorrow aren’t going to create themselves.
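As a rough illustration of the channel knob, the sketch below maps a raw voltage reading from a rotary-switch voltage divider to a channel index. It is not the project's code (which runs on a microcontroller, a Node.js server, and a web page); it assumes a 10-bit ADC and ten evenly spaced divider taps, and the stream URLs are placeholders.

```python
def channel_from_adc(reading: int, positions: int = 10, adc_max: int = 1023) -> int:
    """Map a raw ADC reading to the nearest switch position (0-based)."""
    reading = max(0, min(adc_max, reading))  # clamp noisy or out-of-range values
    step = adc_max / (positions - 1)         # ideal spacing between adjacent taps
    return round(reading / step)


# Hypothetical stream URLs, one per knob position.
CHANNELS = [f"https://example.com/live/ch{n}.m3u8" for n in range(10)]


def source_for(reading: int) -> str:
    """Return the stream URL selected by the current knob position."""
    return CHANNELS[channel_from_adc(reading)]


print(source_for(120))
```

Snapping to the nearest tap rather than using fixed thresholds tolerates some resistor drift and ADC noise, which seems in keeping with a gloriously clunky knob.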

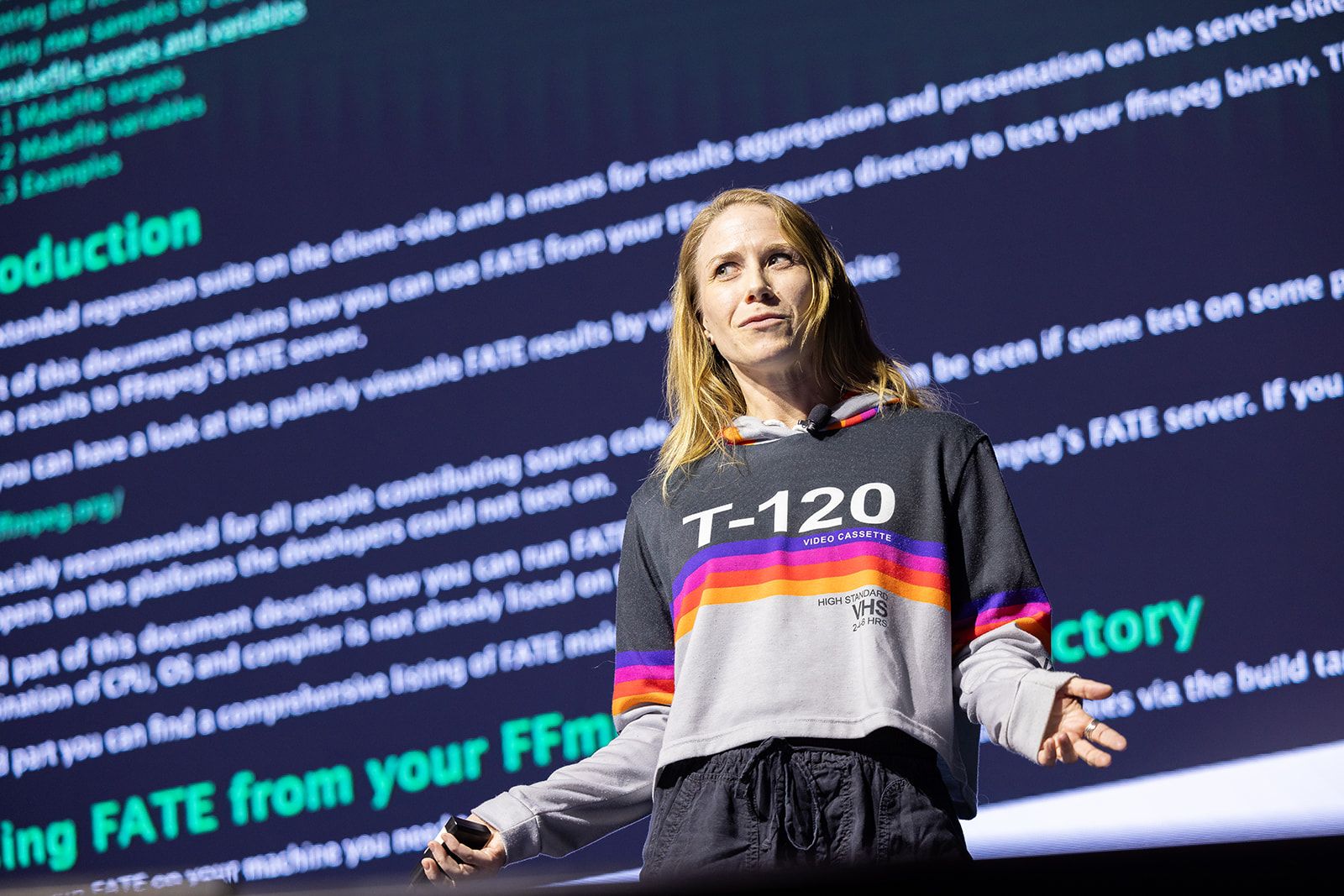

Joanna White

British Film Institute National Archive

FFmpeg's starring role at the BFI National Archive

Ever wondered what happens when 100-year-old celluloid cinema film meets digital workflows? Come and hear about the BFI National Archive, where FFmpeg is more than just a tool: it's a vital force in safeguarding Britain's audiovisual heritage. Witness how we seamlessly compress multi-terabyte film frame image scans into preservation video formats, and discover how open-source libraries power our National Television Archive's live off-air recording system, capturing an impressive 1TB of British ‘telly’ per day. We’ll look at how vintage hardware digitises broadcast videotape collections, feeding them into automated slicing and transcoding pipelines, with FFmpeg at the heart of our open-source toolkit. This essential technology keeps cultural heritage alive and accessible across the UK through platforms like the BFI Player, UK libraries, and the BFI's Mediatheque. Archives internationally have a growing network of developers working on solutions to challenges like format obsolescence by developing and encoding to open standards like FFV1 and Matroska! With high volumes of digitised and born-digital content flowing into global archives daily, the BFI National Archive embraces the spirit of open source by sharing our codebase, enabling other archives to learn from our practices. As the developer who builds many of these workflows, I’m eager to share the code that drives our transcoding processes. I’m passionate about showcasing our work at conferences and celebrating the contributions of open-source developers, like attendees at Demuxed.

Joey Parrish

Streaming Video on 80's Gaming Hardware

What happens when you try to build a video streaming platform using hardware that predates the World Wide Web? This talk explores an ambitious project that transforms the humble Sega Mega Drive / Sega Genesis into a full streaming video player. From coaxing performance out of 16-bit sprite hardware, to custom video encoding, to surprising compression schemes, to some very ugly hardware hacking, we finally achieve 240p at 10fps with 8-bit audio. Come see a complete streaming player inside 64KB of RAM!

Jonathan Lott

AudioShake

Using AI Audio Separation to Transform A/V Workflows

In video production, audio often plays a supporting role, yet its quality profoundly impacts viewer engagement, distribution, accessibility, and content compliance. Traditional workflows struggle with mixed audio tracks, bleed, and ambient noise, making tasks like isolating and boosting dialogue, removing background noise, or extracting music for rights management cumbersome. This session introduces how AI-powered audio separation can transform A/V workflows. By deconstructing complex audio into distinct components (dialogue, music, effects, and ambient sounds), creators can:
- Enhance clarity by isolating and boosting dialogue
- Remove or replace music to ensure copyright compliance
- Reduce background noise for cleaner sound
- Create immersive environments through spatial audio design

We'll discuss the technical challenges of source separation, share insights on training models to distinguish audio elements in noisy environments, and demonstrate real-world applications that improve accessibility, localization, enhancement, immersive content, copyright compliance, and content personalization. This talk may be of interest to video devs working in environments such as broadcast, XR, UGC, streaming, post-production, or content creation (traditional or AI).

Jordi Cenzano

Meta

The media processing pipelines behind AI

In this talk we will discuss the complex media processing pipelines behind media AI models (translation, lip-syncing, text-to-video, etc.). AI models are very picky about media specs (resolutions, frame rates, sample rates, color space, etc.). Also, most of the time, the AI tools that, for instance, generate a fantastic and sharp video from a text prompt are really based on several AI models working in conjunction, each with its own constraints. Our team is responsible for ingesting 1 BILLION media assets DAILY and delivering 1 TRILLION views every 24 hours; for that we use (highly optimized) typical media processing pipelines. In this talk we will explain how we leveraged all of that experience and those building blocks, and added media AI inference as another offering of those pipelines. Now you can upload an asset and deliver it with ABR encodings + CDN, and ALSO alter its content via AI (e.g., add a hat to all the dogs in the scene). And all of that while trying NOT to break the bank (GPU time is really expensive). We think this talk could be useful in revealing the hidden complexities of delivering AI, especially at scale.

Julien Zouein

Trinity College Dublin

Reconstructing 3D from Compressed Video: An AV1-Based SfM Pipeline

We present an AV1-based Structure from Motion pipeline that repurposes AV1 bitstream metadata to generate correspondences for 3D reconstruction. The process of creating 3D models from 2D images, known as Structure from Motion (SfM), traditionally relies on finding and matching distinctive features (keypoints) across multiple images. This feature extraction and matching process is computationally intensive, requiring significant processing power and time. We can bypass this expensive step by using the motion vectors of AV1-encoded videos. We present an analysis of the quality of motion vectors extracted from AV1-encoded video streams. Finally, we present our full AV1-based Structure from Motion pipeline, which reuses motion vectors from AV1 bitstreams to generate correspondences for 3D reconstruction, yielding dense inter-frame matches at near-zero additional cost. Our method reduces front-end time by a median of 42% and yields up to 8× more 3D points with a median reprojection error of 0.5 px, making compressed-domain correspondences a practical route to faster SfM in resource-constrained or real-time settings.
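The core idea, turning block motion vectors into point correspondences, can be sketched as follows. This is an illustration under assumptions rather than the authors' pipeline: `BlockMV` is a hypothetical record for one already-decoded inter block, motion vectors are assumed to be stored in 1/8-pixel units, and one correspondence is taken at each block centre. Extracting the vectors from a real bitstream is out of scope here.

```python
from dataclasses import dataclass

MV_UNITS_PER_PIXEL = 8  # assumption: vectors are stored in 1/8-pixel units


@dataclass(frozen=True)
class BlockMV:
    x: int     # top-left corner of the block in the current frame, in pixels
    y: int
    w: int     # block width and height, in pixels
    h: int
    mv_x: int  # horizontal displacement to the reference frame, in 1/8 pixel
    mv_y: int  # vertical displacement to the reference frame, in 1/8 pixel


def correspondences(blocks):
    """Yield ((x_cur, y_cur), (x_ref, y_ref)) pairs, one per block centre."""
    for b in blocks:
        cx = b.x + b.w / 2
        cy = b.y + b.h / 2
        yield (cx, cy), (
            cx + b.mv_x / MV_UNITS_PER_PIXEL,
            cy + b.mv_y / MV_UNITS_PER_PIXEL,
        )


blocks = [BlockMV(0, 0, 16, 16, 12, -4), BlockMV(16, 0, 16, 16, 0, 0)]
matches = list(correspondences(blocks))
print(matches[0])
```

Each pair plays the role that a matched keypoint would in a conventional SfM front end, without running a feature detector or matcher.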

Karl Shaffer

From Artifacts to Art: A Data-Driven Guide to Android Video Encoding

It's a familiar scenario for many Android developers: a video looks pristine in the camera app, but appears blocky and full of artifacts after being processed for sharing. The diversity of the Android hardware ecosystem presents unique challenges for achieving consistent, high-quality video encoding, where seemingly safe configurations can produce poor results on some devices. This session will dive deep into the most common pitfalls that affect video quality on Android, showing visual examples of their impact and explaining the underlying causes. This talk presents a data-driven approach, offering recommendations for configuring your video encoders that are backed by extensive video quality measurements from our in-house evaluation infrastructure. We'll also briefly touch on some helpful open-source tools, like Transformer and CodecDB Lite, that can aid in implementation. You'll leave this talk with a clear strategy and actionable resources to improve video quality in your Android applications.

Luke Curley

hang.live

MoQ: Not Another Tech Demo

Tired of the yearly MoQ presentation? It's apparently the FUTURE of live media but it apparently hasn't progressed past tech demos of the funny bunny. Me too. That's why I quit my cushy job and vibe coded a real conferencing app in real production. The guts are completely open source with both Rust and TypeScript libraries. This talk will cover how I'm using WebTransport, WebCodecs, WebGPU, WebAudio for native web support, and most importantly, NOT using WebRTC. I'll also cover the relay architecture, as multiple servers can be clustered together to create a global CDN for the best QoS (in theory). Finally, I'll cover a few things that are MUCH easier with MoQ, such as running models in the browser on pixel data with WebGPU. Enough with the tech demos and standards debates. Let's build!

Oli Lisher

Matt McClure

Demuxed

11 years of Demuxed websites and swag

Matt and Oli give an insider history of the many amazing revisions of the Demuxed website, the t-shirts, the posters, and of course those broken mugs.

Mattias Buelens

Dolby Laboratories

VHS for the streaming era: record and replay for HLS

It’s a quiet day at the office, you’re reviewing your colleague’s latest pull request, when all of a sudden a notification pops up. A new Jira ticket greets you: “This stream is stalling on my machine.” But when you play the stream on your end, everything seems to work just fine. Now what? Is it because of an ad break that only happens once an hour? Is there something wrong with their firewall or ISP? Are you even looking at the same stream, or are you hitting an entirely different CDN? We’ve built a tool to record a part of a live HLS stream, and then replay it later on exactly as recorded. Customers can use the tool on their end until they reproduce the issue once, and then send that recording to our engineers to investigate. This way, these intermittent failures that are difficult to reproduce become fully deterministic and reproduce consistently. This makes debugging much easier and faster, since our engineers no longer have to wait until the problem occurs (no more xkcd #303 situations). In this talk, I’ll show how we’ve built the tool and how we’ve used it to debug issues with our customers’ streams. I’ll also show how we’ve integrated it in our end-to-end tests, so we can prevent regressions even for these tougher issues.
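One way to picture record-and-replay for a live playlist is sketched below. It is not the tool described in the talk, only a minimal illustration under assumptions: each playlist response is stored with the time it was captured, and a replay serves whichever snapshot was current at the same elapsed time. The `Recording` class and the sample playlists are hypothetical.

```python
import bisect
from dataclasses import dataclass, field


@dataclass
class Recording:
    """Playlist responses captured from a live stream, keyed by capture time."""
    offsets: list = field(default_factory=list)    # seconds since recording start
    playlists: list = field(default_factory=list)  # playlist text at that moment

    def record(self, offset: float, playlist: str) -> None:
        # Snapshots are assumed to arrive in chronological order.
        self.offsets.append(offset)
        self.playlists.append(playlist)

    def replay(self, elapsed: float) -> str:
        """Return the playlist a client would have seen `elapsed` seconds in."""
        i = bisect.bisect_right(self.offsets, elapsed) - 1
        if i < 0:
            raise LookupError("no playlist recorded yet at this offset")
        return self.playlists[i]


rec = Recording()
rec.record(0.0, "#EXTM3U\n#EXT-X-MEDIA-SEQUENCE:100\n")
rec.record(6.0, "#EXTM3U\n#EXT-X-MEDIA-SEQUENCE:101\n")
rec.record(12.0, "#EXTM3U\n#EXT-X-MEDIA-SEQUENCE:102\n")
print(rec.replay(7.5))
```

Because the answer depends only on elapsed time, every replay sees the same sequence of playlists, which is what makes an intermittent failure reproducible.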

Nicolas Levy

Qualabs

Rise of the Planet of CMCD v2: Building the next-gen player data interface with Open-Source tools

So, CMCD v2 is officially a "thing." The spec is done (or it will be, once we stop finding new ways to break it). The party's over, right? Wrong. The real party is just getting started. We’re here to tell you that CMCD v2 isn’t just an incremental improvement; it’s a generic player-server interface that enables an explosion of new use cases, a new era we call the Rise of the Planet of CMCD v2. This year, we didn't just write the spec; we put it to the test while the ink was still wet. We implemented CMCD v2 in the Common Media Library, dash.js, and Shaka Player, and created the cmcd-toolkit, a fully open-source data collector and analyzer. Our mission was clear: create the very first CMCD v2 deployment in production to analyze the results for the “Ultra-Low Latency Live Streaming at Scale” IBC Accelerator project. In this presentation, we'll share the lessons: how this real-world deployment helped us refine the spec, the insights we gained from measuring a live L3D-DASH implementation, and how you can use the cmcd-toolkit today. But the story doesn't end there… In the process, we had a profound realization: CMCD v2 together with CMSD can be seen as a generic interface between player and server. This unlocks complex use cases far beyond QoE. We're talking about Content Steering, remote player debugging, and even watch parties. Suddenly, CMCD v2 became a game-changer for a whole new set of applications. In the Rise of the Planet of CMCD, the apes win, and this community couldn't be happier. This talk is for the builders, the tinkerers, and everyone who wants to join the player data revolution. ----- Disclaimer: Nico bears no relation to, and has no prior affiliation with, any of the monkeys previously presented. Furthermore, he wishes to state for the record his profound lack of interest in the content of any conversations the 'contemplative monkeys' may have had during their dating period. Any resemblance is purely coincidental.

Nigel Megitt

BBC

Which @*!#ing Timed Text format should I use?

The world of Timed Text is esoteric, detailed, full of annoying abbreviations, and full of wildly differing opinions. I'll be giving mine! I'll also describe the current state of the most common formats, offer some practical and usable advice about which format to adopt for which purpose, and look at where things are heading in the standards world. If time allows, I will attempt to bring some humour to the topic, as well as a select few nuggets of technical complexity to keep the audience away from their IDE for at least a few minutes.

Pedro Tavanez

Touchstream

CMSD and the Temple of Doom: Escaping VQA's Legacy Traps

So there you are, staring at your VQA dashboard at 2 AM, wondering why monitoring video quality feels like being the unlucky archaeologist who always picks the wrong lever. You know the scene, everything's going fine until suddenly you're dodging massive boulders and questioning your life choices. Here's the thing about traditional VQA: it's basically a temple full of booby traps that someone convinced us to call "best practices."

- The Boulder of Compute Costs that chases you down every monthly budget meeting
- The Pit of Proprietary Hardware where good intentions and healthy bank accounts go to die
- The Snake Pit of Integration Hell where your data sources turn on each other
- The Spike Wall of Scale that nobody mentions until you're already impaled

We've all been there. You implement some fancy VQA solution, everything looks great in the demo, and then production hits you like a 50-ton stone ball rolling downhill. Worse yet, when things go wrong, you're stuck waiting 15-30 minutes to even know there's a problem, and then another hour trying to figure out if it's encoding, delivery, or just your metrics lying to you again. But here's where it gets interesting. What if I told you there's actually a secret passage through this whole mess? CMSD-MQA isn't some shiny new vendor promise, it's more like finding that hidden lever that actually does what it's supposed to do. We took this dusty old standard that everyone talked about but nobody really used and turned it into something that cuts our failure detection time from 20+ minutes down to under 2 minutes. I know, I was skeptical too.

In this lightning-fast 10-minute adventure, we'll cover:

- The VQA death traps: why your current setup probably takes 15-30 minutes to tell you something's broken (spoiler: by then, social media already knows)
- The CMSD-MQA escape route: how we reduced mean time to detection by 90% and started catching encoding failures within seconds instead of waiting for viewer complaints
- The treasure: real metrics that matter and that let you pinpoint exactly which CDN edge is having a bad day, with a quick demo of segment-level quality data in action
- Battle-tested implementation tips: the key gotchas we hit and how to avoid them (because 10 minutes means we're cutting straight to the good stuff)

This talk is for: Operations engineers tired of playing Temple Run with their monitoring stack, streaming architects who want to escape the VQA vendor trap maze, and anyone who's ever felt like they need an archaeology degree just to understand why their quality metrics are lying to them.

What treasure you'll claim: a practical map for implementing CMSD-MQA that actually leads to the exit, complete with real-world deployment strategies that work outside of conference demo dungeons. Plus proof that sometimes the ancient standards (when properly excavated) contain the solutions to modern problems. No video engineers were fed to crocodiles during the making of this implementation.

Prachi Sharma

JioHotstar

When Millions Tune In: Server-Guided Ad Insertion for Live Sports Streaming

Delivering targeted ads in live sports streaming is not just an adtech challenge - it’s a streaming engineering problem. At JioHotstar, we serve billions of views across live events, handling concurrency spikes that rank among the largest globally. This talk will explore how we built and scaled our custom Server-Guided Ad Insertion (SGAI) system for live sports, where ad breaks are unpredictable in both timing and duration. We’ll discuss why we moved beyond traditional SSAI, and how we engineered: * Manifest manipulation and segment stitching on the fly * Millisecond-level ad decisioning to insert ads without disrupting playback * Strategies for handling massive concurrency surges during peak moments of live matches. We’ll also share real-world lessons from operating at this scale - where streaming architecture and monetisation must work hand-in-hand. Whether you’re building for ads, scale, or live video, this session will provide practical insights into designing systems that balance playback quality with revenue performance.

Rafal Leszko

Livepeer

Realtime Video AI with Diffusion Models

Generative AI is opening new possibilities for creating and transforming video in real time. In this talk, I’ll explore how recent models such as StreamDiffusion and LongLive push diffusion techniques into practical use for low-latency video generation and transformation. I’ll give a deep technical walkthrough of how these systems can be adapted for streaming use cases, unpacking the full pipeline - from decoding, through the diffusion process, to encoding - and highlighting optimisation strategies, such as KV caching, that make interactive generation possible. I’ll also discuss the tradeoffs between ultra-low latency video transformation and generating longer, more coherent streams. To make it concrete, I’ll present demos of StreamDiffusion (served with the open-source cloud service Daydream) and LongLive (explored with the open-source research tool Scope), showcasing practical examples of both video-to-video transformation and streaming text-to-video generation.

Ryan Bickhart

Amazon IVS

Synchronized horizontal and vertical video without compromises

Video consumption on mobile has exploded over the last few years. Now creators have diverse audiences tuning in from all kinds of devices including phones, PCs, and even TVs. Previously, some portion of the audience would suffer with content that didn’t really fit their screen orientation or device usage, but no longer! We’ve developed a solution built on client side encoding and multitrack ERTMP so broadcasters can contribute synchronized horizontal and vertical content — and we’re not just talking about simply ripping a vertical slice from the main horizontal content. Creators can thoughtfully design their content and layout for both formats to reach viewers anywhere. In this talk we’ll show how broadcasters can contribute dual format content and how we deliver it to viewers, including new HLS multivariant playlist functionality we designed to overcome standards limitations that got in the way of a synchronized, adaptive playback experience.

Sam Bhattacharyya

Katana

Why I ditched cloud transcoding and set up a mac mini render farm

There are a number of use cases where you need to tightly integrate video processing with some form of AI processing on the video, like AI upscaling, real-time lip syncing or gaze correction, or virtual backgrounds. The killer bottleneck for these tasks is almost always CPU <> GPU communication, making them impractical or expensive for real-time applications. The normal approach is setting up a fully GPU pipeline: using a GPU video decoder and building your AI processing directly with CUDA (potentially with custom CUDA kernels) instead of abstracted libraries like PyTorch. It's doable, and we did this at StreamYard, but it's very hard to do and high maintenance. I stumbled upon a ridiculous-sounding but actually plausible alternative: writing neural networks in WebGPU, using WebCodecs to decode/encode frames, and using WebGPU to directly access and manipulate frame data. When a pipeline like this is run on an Apple M4 chip, it can run AI video processing loads 4-5x faster than an equivalent pipeline on a GPU-enabled instance on a cloud provider, and it's also ~5x cheaper to rent an M4 than an entry-level GPU. I've done a number of tests, and my conclusion is that this is efficient because the M4 chip (1) has optimizations for encoding in its hardware, and (2) has GPU <> CPU communication much more tightly integrated than a typical GPU + cloud VM setup. All of these technologies are relatively new (the M4 chip, WebGPU, WebCodecs), but I think those three things together present a viable alternative AI video processing pipeline compared to traditional processing. Using browser-based encoding also makes it much easier to integrate 'simpler' manipulations, like adding 'banners' in live streams, and it's also much easier to integrate with WebRTC workflows. I have a tendency to write my own WebGPU neural networks, but TensorFlow.js has improved in the last few years to accommodate importing video frames from the GPU.
There are certainly downsides, like working with specialty Mac cloud providers, but it might actually be practical and easier to maintain (at least on the software side).

Steve Heffernan

Mux

No More Player: Reinventing Video.js for the Next 15 Years (Sorry, Plugins!)

After 15 years, Video.js still works great—if you pretend React, Svelte, and Tailwind don’t exist. Turns out frameworks are popular, developers want stunning defaults (ahem, Plyr), and Web Components aren’t quite the React miracle we once claimed (my bad). Video.js v10 is our dramatic, fresh-slate rebuild designed for today’s web—idiomatic, performant, and beautiful by default. Inspired by modern UI development and our own painful-yet-insightful journeys, we're breaking out of the black box of "player" to introduce: * Un-styled UI primitives (a la BaseUI, Radix) for React and HTML, enabling deep customization with any CSS (Tailwind, Modules, Scoped) * A core of platform-agnostic state management, supporting idiomatic patterns (like React hooks) in every environment, with our sights on React Native * A new streaming engine *framework*, building on the Common Media Library effort to cut down the file sizes of HLS/DASH players, even dynamically based on the current content But this isn't just the next version of Video.js with the usual crew of amazing contributors. It’s also the spiritual successor to MediaChrome, Vidstack, ReactPlayer, and Plyr, in collaboration with those player creators/communities, representing billions of player loads monthly. It's exactly like Avengers starring Big Buck Bunny. Bold? Definitely. Risky? Probably. A migration headache? Absolutely (sorry again, plugins!). But if we're aiming for another 15 years, it’s time we rethink what "player" even means. Spoiler: one size doesn't fit all anymore. Join us to glimpse the future, meet the new collaboration behind Video.js v10, and most importantly—tell us everything we got wrong before it ships.

Ted Meyer

A default HLS player for Chrome (and why I hate the robustness principle)

I'm a Chromium engineer who's responsible for launching HLS playback natively in the Chromium project (and thus the Google Chrome browser). The talk I plan to give starts with a brief overview of the history of HLS in browsers, including how we got to the point of launching it in Chrome. Next up is an explanation of how this new player works, and what advantages we can make use of by building it into the browser vs shipping packaged JavaScript. I haven't yet decided how technical I will get when talking about the Chromium pipeline/demuxer/decoder architecture or how MSE works "under the hood". The current version of the talk is intended for the Chrome media team, so it covers that stuff, but I haven't yet decided if that's the right fit for the general Demuxed conference. I also plan on adding a section addressing the HLS library folks that isn't in the media-team version of the talk. I want to make it clear that we're not looking to replace those projects, and they will still have a lot of value to offer to site owners from the perspective of control, telemetry, and spec extensions. Next, the talk covers some of the issues I faced when launching the new player, including some stupid bugs I caused, such as breaking a lot of the Korean web-radio market for an afternoon. I'll have to see exactly what metrics I'm allowed to include in this part of the talk, but I'll give a brief overview of some of the major issues I've caused/found/fixed along the way. Finally, I'll finish up talking about how the "robustness principle" of "Be conservative in what you send, be liberal in what you accept" caused a lot of delay launching the player, and make a plea to the community to both produce more spec-compliant manifests and for the players to be a bit less liberal in accepting wonky playlists.
I think it would be a good talk for Demuxed because (at least from what I saw last year when I first attended) there aren't as many talks from the browser side of things, so it could help add to the breadth of subjects covered.

Thasso Griebel

Castlabs

Spec-Tacular Streaming: Lessons Learned in Standards Land

Every smooth playback, perfect seek, and crisp caption owes a quiet debt to specifications. Yet for most of us, “the spec” is just a PDF we skim when something breaks. I’ll open with the moment I stumbled into a working group, thinking I’d comment on one clause -- only to discover an entire ecosystem in which debates decide how billions of minutes reach our screens. We’ll rewind through a few watershed specs to see why some drafts gather dust while others redefine workflows, CDN footprints, and even viewer expectations. Along the way you’ll hear the unpolished bits: email threads measured in megabytes, terminology that morphs mid-meeting, and the small wins that make the slog worth it when a single clarifying sentence ends the debate. Finally, I’ll share low-friction entry points for newcomers: public GitHub issues labelled “good first spec,” mailing lists that welcome lurkers turned contributors, and realistic timelines so you don’t lose heart waiting for the next committee cycle. Whether you’re a video dev, player maintainer, or just the person who always spots typos, Standards Land has room for your voice -- and the streamers of tomorrow will thank you for it.

Zoe Liu

Visionular

Thomas Davies

Visionular

AI-Driven Real-Time Ball Tracking for Live Sports Streaming

When viewing sports events, audiences typically focus on specific Regions of Interest (ROIs), such as player faces, jersey numbers, or dynamic objects like the ball. Detecting and tracking these ROIs can enhance the user’s Quality of Experience (QoE). These ROIs frequently shift and change dynamically within a scene, making accurate real-time processing challenging. In live streaming, manual intervention to track this motion may be impractical and too expensive for many low-cost operations, which frequently make use of locked-off cameras. Some automatic solutions exist but rely on multi-camera operation. Addressing these limitations, we present an innovative AI-driven real-time ball detection and tracking solution specifically optimized for live-streamed sports events. In this talk we will describe the architecture and the core technology components. Our system employs advanced convolutional neural networks (CNNs), leveraging the efficiency and accuracy of combined YOLO-SORT models for detection and tracking. We integrate these components into an optimised GPU cloud-based architecture, enabling seamless real-time cropping and digital zoom without disruptive visual artifacts like abrupt camera movements. This intelligent content-aware solution significantly improves the QoE by automatically identifying and continuously tracking the ball, adapting smoothly to its movement in real time. Extensive real-world testing demonstrates our system's effectiveness across various sports scenarios, consistently achieving frame rates above 30 fps at 1920x1080 resolution on GPU-equipped cloud instances. Our approach not only reduces operational costs but also enhances viewer satisfaction by delivering a visually comfortable and engaging viewing experience. Future extensions of this work include real-time event detection, enabling further personalized and engaging sports viewing experiences.
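To make the "cropping without abrupt camera movements" idea concrete, here is a minimal sketch of one way a crop window could follow per-frame ball detections smoothly. It is not the speakers' implementation: the abstract names YOLO and SORT for detection and tracking but does not say how the crop is stabilised, so the exponential smoothing, the function name `smooth_crop_centers`, and the `alpha` parameter are all assumptions for illustration.

```python
from typing import List, Optional


def smooth_crop_centers(
    detections: List[Optional[float]],
    frame_w: float,
    crop_w: float,
    alpha: float = 0.2,
) -> List[float]:
    """Return one horizontal crop center per frame.

    detections: ball x position per frame, or None when the detector
    missed the ball (hypothetical input format).
    alpha: smoothing factor in (0, 1]; smaller values move more gently.
    """
    half = crop_w / 2
    center = frame_w / 2  # start with the crop in the middle of the frame
    centers = []
    for x in detections:
        if x is not None:
            # Move only a fraction of the way toward the ball each frame.
            center += alpha * (x - center)
        # On a missed detection, hold the previous position.
        # Keep the crop window fully inside the source frame.
        center = min(max(center, half), frame_w - half)
        centers.append(center)
    return centers


if __name__ == "__main__":
    print(smooth_crop_centers([1000.0, None, 1900.0, 1900.0], 1920, 608, alpha=0.5))
```

A lower `alpha` gives a calmer virtual camera at the cost of lagging behind fast play; a production system would likely tune this per sport or use a motion model instead.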

Chaitanya Bandikatla

TikTok

Tomas Kvasnicka

CDN77

Improving Video Delivery and Streaming Performance with a "Media-First" Approach for CMAF

In our ongoing effort to enhance live streaming efficiency and viewer experience, we at TikTok partnered with CDN77 to explore a new approach called “Media First”. This talk will examine how Media First transforms segment-based video delivery by merging multiple CMAF chunks into unified media objects and leveraging intelligent CDN edge logic. By combining media segments and applying dynamic URL rewriting at the edge, the CDN ensures that each viewer receives the freshest and most cacheable version of the video, even under real-time constraints. By shifting cache logic from “manifest-first” to “media-first,” we observed significant improvements in cache hit ratio, startup time, and stream stability. Specifically, we reduced origin fetches during peak events, improved playback startup predictability across diverse network conditions, and achieved a 2.5% reduction in first-frame delay and a 9% reduction in stalling. This talk highlights the architectural and operational collaboration between TikTok and CDN77 engineering teams, including lessons learned from edge compute deployments, manifest adaptation, and adaptive delivery strategies. Key Takeaways: * Media First Architecture: How merging segment groups and rethinking cache hierarchy enables higher media availability and CDN efficiency. * Edge URL Rewriting: How real-time request rewriting delivers the most current media while minimizing origin MISS traffic. * Performance Gains: Insights into how Media First improves QoE metrics, including startup latency, resolution stability, and playback resilience. * Cross-Team Innovation: Lessons from collaborative development between application logic and CDN infrastructure to advance live streaming capabilities. This talk will be co-presented by engineers from both companies and will include real-world production metrics and implementation details.

Vittorio Giovara

Vimeo

Making sense of all the new VR video formats

Do you believe there is a lot more to video than generative AI or Ad Insertion? Are you interested in the new and shiny VR ecosystem? Are you confused by all the new video formats, making your head spin like a half-equirectangular 180° 90-fov video without a yaw/pitch/roll set? Well, you've come to the right talk! With a little bit of MV-HEVC magic, a particular projection algorithm, and special metadata, we'll be able to describe how Immersive video works, and how it differs from a classic Spatial video. I'll also go over all the innovations that are happening in the VR field, and introduce you to the plethora of new formats recently released, with a few tips and tricks on how to support them.

Zac Shenker

Fastly

Security Theater: Moving Beyond Performative Content Protection

We contractually implement DRM and forensic watermarking, but how effective are these solutions against modern piracy? This talk dissects common vulnerabilities, from Widevine L3 key extraction to the limitations of A/B segment watermarking against determined adversaries. We'll move beyond checkbox security and detail practical, alternative protection strategies. Topics include implementing short-lived, signed Common Access Tokens (CAT) for token authentication, building rapid revocation systems, and leveraging CDN access logs with player analytics to build real-time anomaly detection for credential sharing and illegal redistribution.
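As a rough illustration of the "short-lived, signed token plus rapid revocation" idea, the sketch below issues and checks an HMAC-signed token with an expiry, a path scope, and a revocation set. It deliberately does not implement the Common Access Token format itself; the claim names, the `SECRET` key, and both function names are hypothetical and chosen only to show the shape of the check.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional, Set

SECRET = b"demo-secret-rotate-me"  # hypothetical shared key, for illustration only


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str) -> str:
    return _b64(hmac.new(SECRET, payload.encode("ascii"), hashlib.sha256).digest())


def issue_token(subject: str, path_prefix: str, ttl_s: int,
                now: Optional[float] = None) -> str:
    """Create a token valid for ttl_s seconds and scoped to path_prefix."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "path": path_prefix, "exp": int(now) + ttl_s}
    payload = _b64(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return payload + "." + _sign(payload)


def verify_token(token: str, request_path: str, revoked: Set[str],
                 now: Optional[float] = None) -> bool:
    """Check signature first, then expiry, path scope, and revocation."""
    now = time.time() if now is None else now
    try:
        payload, sig = token.split(".")
        if not hmac.compare_digest(sig, _sign(payload)):
            return False
        claims = json.loads(_unb64(payload))
    except (ValueError, TypeError):
        return False
    return (
        claims["exp"] > now
        and request_path.startswith(claims["path"])
        and claims["sub"] not in revoked
    )
```

Keeping the lifetime short limits how long a leaked token is useful, while the revocation set covers the window before expiry; how that set is distributed to the edge is outside this sketch.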

The Schedule

09:30 GMT

Matt McClure

Demuxed

Opening Remarks

09:45 GMT

Jordi Cenzano

Meta

The media processing pipelines behind AI

In this talk we will look at the complex media processing pipelines behind media AI models (translations, lip syncing, text to video, etc.). AI models are very picky about media specs (resolutions, frame rates, sample rates, colorspace, etc.). Also, most of the time the AI tools that you see, which for instance generate a fantastic and sharp video from a text prompt, are really based on several AI models working in conjunction, each with its own constraints. Our team is responsible for ingesting 1 BILLION media assets DAILY and delivering 1 TRILLION views every 24h; for that we use (highly optimized) typical media processing pipelines. In this talk we will explain how we leveraged all of that experience and those building blocks, and added media AI inference as another offering of those pipelines. Now you can upload an asset and deliver it with ABR encodings + CDN, and ALSO alter its content via AI (e.g. add a hat to all the dogs in the scene). And all of that while trying NOT to break the bank (GPU time is really expensive). We think this talk could be useful to reveal the hidden complexities of delivering AI, especially at scale.

Sam Bhattacharyya

Katana

Why I ditched cloud transcoding and set up a mac mini render farm

There are a number of use cases where you need to tightly integrate video processing with some form of AI processing on the video, like AI upscaling, real-time lip syncing or gaze correction, or virtual backgrounds. The killer bottleneck for these tasks is almost always CPU <> GPU communication, making them impractical or expensive for real-time applications. The normal approach is setting up a fully GPU pipeline: using a GPU video decoder and building your AI processing directly with CUDA (potentially with custom CUDA kernels) instead of abstracted libraries like PyTorch. It's doable, and we did this at StreamYard, but it's very hard to do and high maintenance. I stumbled upon a ridiculous-sounding but actually plausible alternative: writing neural networks in WebGPU, using WebCodecs to decode/encode frames, and using WebGPU to directly access and manipulate frame data. When a pipeline like this is run on an Apple M4 chip, it can run AI video processing loads 4-5x faster than an equivalent pipeline on a GPU-enabled instance on a cloud provider, and it's also ~5x cheaper to rent an M4 than an entry-level GPU. I've done a number of tests, and my conclusion is that this is efficient because the M4 chip (1) has optimizations for encoding in its hardware, and (2) has GPU <> CPU communication much more tightly integrated than a typical GPU + cloud VM setup. All of these technologies are relatively new (the M4 chip, WebGPU, WebCodecs), but I think those three things together present a viable alternative AI video processing pipeline compared to traditional processing. Using browser-based encoding also makes it much easier to integrate 'simpler' manipulations, like adding 'banners' in live streams, and it's also much easier to integrate with WebRTC workflows. I have a tendency to write my own WebGPU neural networks, but TensorFlow.js has improved in the last few years to accommodate importing video frames from the GPU.
There are certainly downsides, like working with specialty Mac cloud providers, but it might actually be practical and easier to maintain (at least on the software side).

10:30 GMT

Break

11:00 GMT

Prachi Sharma

JioHotstar

When Millions Tune In: Server-Guided Ad Insertion for Live Sports Streaming

Delivering targeted ads in live sports streaming is not just an adtech challenge - it’s a streaming engineering problem. At JioHotstar, we serve billions of views across live events, handling concurrency spikes that rank among the largest globally. This talk will explore how we built and scaled our custom Server-Guided Ad Insertion (SGAI) system for live sports, where ad breaks are unpredictable in both timing and duration. We’ll discuss why we moved beyond traditional SSAI, and how we engineered: * Manifest manipulation and segment stitching on the fly * Millisecond-level ad decisioning to insert ads without disrupting playback * Strategies for handling massive concurrency surges during peak moments of live matches. We’ll also share real-world lessons from operating at this scale - where streaming architecture and monetisation must work hand-in-hand. Whether you’re building for ads, scale, or live video, this session will provide practical insights into designing systems that balance playback quality with revenue performance.

Zac Shenker

Fastly

Security Theater: Moving Beyond Performative Content Protection

We contractually implement DRM and forensic watermarking, but how effective are these solutions against modern piracy? This talk dissects common vulnerabilities, from Widevine L3 key extraction to the limitations of A/B segment watermarking against determined adversaries. We'll move beyond checkbox security and detail practical, alternative protection strategies. Topics include implementing short-lived, signed Common Access Tokens (CAT) for token authentication, building rapid revocation systems, and leveraging CDN access logs with player analytics to build real-time anomaly detection for credential sharing and illegal redistribution.

Zoe Liu

Visionular

Thomas Davies

Visionular

AI-Driven Real-Time Ball Tracking for Live Sports Streaming

When viewing sports events, audiences typically focus on specific Regions of Interest (ROIs), such as player faces, jersey numbers, or dynamic objects like the ball. Detecting and tracking these ROIs can enhance the user’s Quality of Experience (QoE). These ROIs frequently shift and change dynamically within a scene, making accurate real-time processing challenging. In live streaming, manual intervention to track this motion may be impractical and too expensive for many low-cost operations, which frequently make use of locked-off cameras. Some automatic solutions exist but rely on multi-camera operation. Addressing these limitations, we present an innovative AI-driven real-time ball detection and tracking solution specifically optimized for live-streamed sports events. In this talk we will describe the architecture and the core technology components. Our system employs advanced convolutional neural networks (CNNs), leveraging the efficiency and accuracy of combined YOLO-SORT models for detection and tracking. We integrate these components into an optimised GPU cloud-based architecture, enabling seamless real-time cropping and digital zoom without disruptive visual artifacts like abrupt camera movements. This intelligent content-aware solution significantly improves the QoE by automatically identifying and continuously tracking the ball, adapting smoothly to its movement in real time. Extensive real-world testing demonstrates our system's effectiveness across various sports scenarios, consistently achieving frame rates above 30 fps at 1920x1080 resolution on GPU-equipped cloud instances. Our approach not only reduces operational costs but also enhances viewer satisfaction by delivering a visually comfortable and engaging viewing experience. Future extensions of this work include real-time event detection, enabling further personalized and engaging sports viewing experiences.

Nigel Megitt

BBC

Which @*!#ing Timed Text format should I use?

The world of Timed Text is esoteric, detailed, full of annoying abbreviations, and full of wildly differing opinions. I'll be giving mine! But also, describing the current state of the most common formats, and some practical and usable advice about which format to adopt for which purpose, and where things are heading in the standards world. If time allows, I will attempt to bring some humour to the topic, as well as a select few nuggets of technical complexity to keep the audience away from their IDE for at least a few minutes.

12:30 GMT

13:30 GMT

Christian Pillsbury

Mux

The Great Divorce: How Modern Streaming Broke Its Marriage with HTTP

For over a decade, HTTP Adaptive Streaming was the poster child for how standards should work together. HLS, DASH, and their predecessors succeeded precisely because they embraced HTTP's caching, took advantage of CDNs, and worked within browser security models. But here's the inconvenient truth: many of our newest streaming "innovations" are systematically breaking this symbiotic relationship. Multi-CDN content steering requires parameterizing URLs (for both media requests and content steering polling) and client/server contracts that inherently and literally remove browser cache from the equation. CMCD v2 abandons JSON sidecar payloads for either query parameters that cache-bust every request or custom headers that trigger double the network requests due to CORS preflight checks. Signed URLs with rotating tokens make browser caching impossible. We've become so focused on solving today's edge cases that we're undermining the fundamental advantages that made HTTP streaming successful in the first place. In this session, you'll discover how seemingly innocent streaming standards are creating a hidden performance tax of 2x network requests, cache invalidation, and resource waste. You'll learn why CORS preflight requests and RFC 9111 cache key requirements are putting streaming engineers in impossible positions, forcing them to choose between security and performance. Most importantly, you'll walk away with practical strategies using service workers and cache API to work around these issues client-side, plus concrete recommendations for how we can evolve both streaming and web standards to work together instead of against each other. Because the future of streaming isn't about bypassing the web—it's about making the web work better for streaming.
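The cache-busting effect of per-request query parameters can be shown with a small sketch. It normalises a media URL into a cache key by dropping parameters that change on every request, which is roughly what a client-side cache layer would need to do before a lookup. This is an illustration under assumptions, not the speaker's approach: the `CMCD` query key comes from the CMCD specification, while `token`, `VOLATILE_PARAMS`, and `cache_key` are hypothetical names, and a browser implementation would live in a service worker rather than in Python.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed to vary per request without changing the response body.
# "CMCD" is the query key defined by CMCD; "token" is a hypothetical name.
VOLATILE_PARAMS = {"CMCD", "token"}


def cache_key(url: str) -> str:
    """Return the URL with volatile query parameters removed and the rest sorted."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in VOLATILE_PARAMS
    ]
    kept.sort()  # make the key independent of parameter order
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    a = "https://cdn.example.com/v/seg_42.m4s?CMCD=bl%3D21300&rendition=720p"
    b = "https://cdn.example.com/v/seg_42.m4s?rendition=720p&CMCD=bl%3D18000"
    print(cache_key(a) == cache_key(b))
```

Whether it is safe to ignore a parameter is a policy decision: a signed token exists to authorise the request, so serving a cached response without re-checking it only makes sense where that trade-off is acceptable.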

Ted Meyer

A default HLS player for Chrome (and why I hate the robustness principle)

I'm a Chromium engineer who's responsible for launching HLS playback natively in the Chromium project (and thus the Google Chrome browser). The talk I plan to give starts with a brief overview of the history of HLS in browsers, including how we got to the point of launching it in Chrome. Next up is an explanation of how this new player works, and what advantages we can make use of by building it into the browser vs shipping packaged JavaScript. I haven't yet decided how technical I will get when talking about the Chromium pipeline/demuxer/decoder architecture or how MSE works "under the hood". The current version of the talk is intended for the Chrome media team, so it covers that stuff, but I haven't yet decided if that's the right fit for the general Demuxed conference. I also plan on adding a section addressing the HLS library folks that isn't in the media-team version of the talk. I want to make it clear that we're not looking to replace those projects, and they will still have a lot of value to offer to site owners from the perspective of control, telemetry, and spec extensions. Next, the talk covers some of the issues I faced when launching the new player, including some stupid bugs I caused, such as breaking a lot of the Korean web-radio market for an afternoon. I'll have to see exactly what metrics I'm allowed to include in this part of the talk, but I'll give a brief overview of some of the major issues I've caused/found/fixed along the way. Finally, I'll finish up talking about how the "robustness principle" of "Be conservative in what you send, be liberal in what you accept" caused a lot of delay launching the player, and make a plea to the community to both produce more spec-compliant manifests and for the players to be a bit less liberal in accepting wonky playlists.
I think it would be a good talk for Demuxed because (at least from what I saw last year when I first attended) there aren't as many talks from the browser side of things, so it could help add to the breadth of subjects covered.

David Springall

Yospace

Olivier Cortambert

Yospace

Another talk about ads, but this time what if we talked about the actual ads??

In 2019, the Interactive Advertising Bureau (IAB) released the Secure Interactive Media Interface Definition (SIMID). This format allows Publishers/Advertisers to have a player display an Ad on top or alongside their stream. Two years later, Apple engineers released HLS Interstitial and that’s how Server Guided Ad Insertion (SGAI) was born. Fast forward to 2025, DASH is getting support for SGAI and SGAI is trending. And one of the reasons it is trending is that it allows players to “easily” display new ad formats - in the form of an ad on top of or alongside the stream. Now, we are seeing demos with streams having fancy ads that fade in/out, play alongside content in the corner or take an L-shape part of the screen. But no one is telling you how you sell this space, how you signal a side-by-side ad, what type of media formats the ads need to be etc… Actually, it’s not that no-one talks about it, it’s that no-one in the streaming industry talks about it, but the ad folks (ie. the IAB) are actually working on it. And, *spoiler alert*, they talk about SIMID not SGAI. What if we (the streaming tech world) actually talked to our customers (the ad ecosystem)? What if we actually focused on what really matters: better ads for a better viewer experience? In this talk, we will go through the different cognate words that make it hard for us to understand ad people. We will of course have a couple of words from our marketing friends who are great at finding words that make everything look 100x better. Can you guess what linear means for an ad guy? Or, what’s the difference between an interactive and an immersive ad? (hint: it’s not Virtual Reality!). And what about live rewind ads - how cool do they actually sound in the ad world? We will also review the different standards bodies: on one side, the IAB (for the ads); on the other, Apple, SVTA, CTA-WAVE (for the streaming side). Have you ever met an ad person at one of your Streaming Tech Talks? 
I don’t mean someone that talks about SSAI or SGAI, but someone that talks about inventory, supply, and demand. Have you ever thought about fill rates, CPMs, and what affects those metrics? We will look at what we can do to help the ad ecosystem maximise every advertising opportunity, so that generating more revenue does not have to mean more ads on more parts of the screen every time. Finally, we will talk about the trendy ad formats that are floating around and all the challenges they bring (but from an ad tech side, not a streaming tech side). This is going to be the type of talk where we all have an opinion and we all know the challenges (SGAI adoption, device capabilities, player complexity). But it’s time to get out of our bubble and think about the challenges that the ad ecosystem faces and how we can help. How do we avoid showing a cropped ad or, worse, cropping the most important part of the original stream? May our shared love for XML (VAST/DASH) lead us to a higher fill rate!

Read more

14:45 GMT

Break

15:15 GMT

Julien Zouein

Trinity College Dublin

Reconstructing 3D from Compressed Video: An AV1-Based SfM Pipeline

We present an AV1-based Structure from Motion pipeline that repurposes AV1 bitstream metadata to generate correspondences for 3D reconstruction. The process of creating 3D models from 2D images, known as Structure from Motion (SfM), traditionally relies on finding and matching distinctive features (keypoints) across multiple images. This feature extraction and matching process is computationally intensive, requiring significant processing power and time. We can bypass this expensive step by using the motion vectors already present in AV1-encoded video. We first present an analysis of the quality of motion vectors extracted from AV1-encoded video streams. We then present our full AV1-based Structure from Motion pipeline, which reuses motion vectors from AV1 bitstreams to generate correspondences for 3D reconstruction, yielding dense inter-frame matches at near-zero additional cost. Our method reduces front-end time by a median of 42% and yields up to 8× more 3D points with a median reprojection error of 0.5 px, making compressed-domain correspondences a practical route to faster SfM in resource-constrained or real-time settings.
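As a rough illustration of the idea in this abstract, the sketch below turns block motion vectors into point correspondences between two frames. It is not the speakers' pipeline: the `MotionVector` layout, the `correspondences` helper, and the `min_magnitude` filter are all hypothetical, and it assumes the vectors have already been extracted from the decoder and converted to whole-pixel units.

```python
from typing import List, NamedTuple, Tuple

Point = Tuple[float, float]


class MotionVector(NamedTuple):
    """Hypothetical record for one decoded block (all values in pixels)."""
    x: float   # block centre in the current frame
    y: float
    dx: float  # displacement to the matching block in the reference frame
    dy: float


def correspondences(
    mvs: List[MotionVector], min_magnitude: float = 0.0
) -> List[Tuple[Point, Point]]:
    """Map each motion vector to a (current point, reference point) pair.

    Vectors shorter than min_magnitude are dropped, since near-static
    blocks carry little parallax information for reconstruction.
    """
    matches = []
    for mv in mvs:
        magnitude = (mv.dx ** 2 + mv.dy ** 2) ** 0.5
        if magnitude < min_magnitude:
            continue
        matches.append(((mv.x, mv.y), (mv.x + mv.dx, mv.y + mv.dy)))
    return matches
```

The resulting pairs play the role that matched keypoints play in a feature-based SfM front end, which is why no descriptor extraction or matching step appears here.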

Read more

Chaitanya Bandikatla

TikTok

Tomas Kvasnicka

CDN77

Improving Video Delivery and Streaming Performance with a "Media-First" Approach for CMAF

In our ongoing effort to enhance live streaming efficiency and viewer experience, we at TikTok partnered with CDN77 to explore a new approach called “Media First”. This talk will examine how Media First transforms segment-based video delivery by merging multiple CMAF chunks into unified media objects and leveraging intelligent CDN edge logic. By combining media segments and applying dynamic URL rewriting at the edge, the CDN ensures that each viewer receives the freshest and most cacheable version of the video, even under real-time constraints. By shifting cache logic from “manifest-first” to “media-first,” we observed significant improvements in cache hit ratio, startup time, and stream stability. Specifically, we reduced origin fetches during peak events, improved playback startup predictability across diverse network conditions, and achieved a 2.5% reduction in first-frame delay and a 9% reduction in stalling. This talk highlights the architectural and operational collaboration between TikTok and CDN77 engineering teams, including lessons learned from edge compute deployments, manifest adaptation, and adaptive delivery strategies.
Key Takeaways:
* Media First Architecture: How merging segment groups and rethinking cache hierarchy enables higher media availability and CDN efficiency.
* Edge URL Rewriting: How real-time request rewriting delivers the most current media while minimizing origin MISS traffic.
* Performance Gains: Insights into how Media First improves QoE metrics, including startup latency, resolution stability, and playback resilience.
* Cross-Team Innovation: Lessons from collaborative development between application logic and CDN infrastructure to advance live streaming capabilities.
This talk will be co-presented by engineers from both companies and will include real-world production metrics and implementation details.

Read more

Joanna White

British Film Institute National Archive

FFmpeg's starring role at the BFI National Archive

Ever wondered what happens when 100-year-old celluloid cinema film meets digital workflows? Come and hear about the BFI National Archive, where FFmpeg is more than just a tool: it's a vital force in safeguarding Britain's audiovisual heritage. Witness how we seamlessly compress multi-terabyte film frame image scans into preservation video formats, and discover how open source libraries power our National Television Archive's live off-air recording system, capturing an impressive 1TB of British ‘telly’ per day. We’ll look at how vintage hardware digitises broadcast videotape collections, feeding them into automated slicing and transcoding pipelines, with FFmpeg at the heart of our open-source toolkit. This essential technology keeps cultural heritage alive and accessible across the UK through platforms like the BFI Player, UK libraries, and the BFI's Mediatheque. Archives internationally have a growing network of developers working on solutions to challenges like format obsolescence by developing and encoding to open standards like FFV1 and Matroska! With high volumes of digitised and born-digital content flowing into global archives daily, the BFI National Archive embraces the spirit of open source by sharing our codebase, enabling other archives to learn from our practices. As the Developer who builds many of these workflows, I’m eager to share the code that drives our transcoding processes. I’m passionate about showcasing our work at conferences and celebrating the contributions of open-source developers, like attendees at Demuxed.
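For readers who have not encoded to FFV1 and Matroska before, here is a minimal sketch of what such a transcode command can look like when assembled from Python. The `ffv1_mkv_command` helper and its defaults are assumptions for illustration only, not the BFI National Archive's actual settings; the flags themselves (`-c:v ffv1`, `-level 3`, `-g 1`, `-slices`, `-slicecrc 1`) are standard FFmpeg options for FFV1 version 3 with intra-only frames and per-slice checksums.

```python
from typing import List


def ffv1_mkv_command(source: str, target: str, slices: int = 16) -> List[str]:
    """Build (but do not run) an FFmpeg argument list for FFV1 in Matroska.

    Hypothetical helper: the slice count and audio handling are
    illustrative defaults, not a recommended preservation profile.
    """
    if not target.endswith(".mkv"):
        raise ValueError("target should be a Matroska (.mkv) file")
    return [
        "ffmpeg",
        "-i", source,
        "-c:v", "ffv1",           # lossless FFV1 video codec
        "-level", "3",            # FFV1 version 3
        "-g", "1",                # every frame is a keyframe
        "-slices", str(slices),   # split frames into slices
        "-slicecrc", "1",         # per-slice CRCs for fixity checks
        "-c:a", "copy",           # leave the audio stream untouched
        target,
    ]
```

The list can be passed to `subprocess.run(cmd, check=True)` on a machine with FFmpeg installed; building the arguments separately keeps the command easy to log and review before anything is executed.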

Read more

16:15 GMT

Short Break

16:30 GMT

Jonathan Lott

AudioShake

Using AI Audio Separation to Transform A/V Workflows

In video production, audio often plays a supporting role, yet its quality profoundly impacts viewer engagement, distribution, accessibility, and content compliance. Traditional workflows struggle with mixed audio tracks, bleed, and ambient noise, making tasks like isolating and boosting dialogue, removing background noise, or extracting music for rights management cumbersome. This session introduces how AI-powered audio separation can transform A/V workflows. By deconstructing complex audio into distinct components (dialogue, music, effects, and ambient sounds), creators can:
- Enhance clarity by isolating and boosting dialogue
- Remove or replace music to ensure copyright compliance
- Reduce background noise for cleaner sound
- Create immersive environments through spatial audio design
We'll discuss the technical challenges of source separation, share insights on training models to distinguish audio elements in noisy environments, and demonstrate real-world applications that improve accessibility, localization, enhancement, immersive content, copyright compliance, and content personalization. This talk may be of interest to video devs working in environments such as broadcast, XR, UGC, streaming, post-production, or content creation (traditional or AI).

Read more

Mattias Buelens

Dolby Laboratories

VHS for the streaming era: record and replay for HLS

It’s a quiet day at the office, you’re reviewing your colleague’s latest pull request, when all of a sudden a notification pops up. A new Jira ticket greets you: “This stream is stalling on my machine.” But when you play the stream on your end, everything seems to work just fine. Now what? Is it because of an ad break that only happens once an hour? Is there something wrong with their firewall or ISP? Are you even looking at the same stream, or are you hitting an entirely different CDN? We’ve built a tool to record a part of a live HLS stream, and then replay it later on exactly as recorded. Customers can use the tool on their end until they reproduce the issue once, and then send that recording to our engineers to investigate. This way, these intermittent failures that are difficult to reproduce become fully deterministic and reproduce consistently. This makes debugging much easier and faster, since our engineers no longer have to wait until the problem occurs (no more xkcd #303 situations). In this talk, I’ll show how we’ve built the tool and how we’ve used it to debug issues with our customers’ streams. I’ll also show how we’ve integrated it in our end-to-end tests, so we can prevent regressions even for these tougher issues.
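To make the record-and-replay idea concrete, here is a minimal sketch of one way it could work for playlists alone. It is not the tool described in this talk: the `Recording` class and its methods are hypothetical, segments are ignored, and it assumes playlist snapshots are recorded in increasing time order.

```python
import bisect
from dataclasses import dataclass, field
from typing import List


@dataclass
class Recording:
    """Hypothetical store of timestamped live playlist snapshots."""
    times: List[float] = field(default_factory=list)    # seconds since start
    playlists: List[str] = field(default_factory=list)  # raw playlist text

    def record(self, t: float, playlist_text: str) -> None:
        """Save the playlist as fetched t seconds into the recording."""
        if self.times and t < self.times[-1]:
            raise ValueError("snapshots must be recorded in time order")
        self.times.append(t)
        self.playlists.append(playlist_text)

    def replay(self, t: float) -> str:
        """Return the playlist a player would have seen t seconds in."""
        i = bisect.bisect_right(self.times, t) - 1
        if i < 0:
            raise LookupError("no snapshot recorded at or before this time")
        return self.playlists[i]
```

Because `replay` depends only on the elapsed time, serving it from a local HTTP server would give every playback run the same sequence of playlist updates, which is what makes an intermittent failure reproducible.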

Read more

Vittorio Giovara

Vimeo

Making sense of all the new VR video formats

Do you believe there is a lot more to video than generative AI or ad insertion? Are you interested in the new and shiny VR ecosystem? Are you confused by all the new video formats, making your head spin like a half-equirectangular 180º 90-fov video without a yaw/pitch/roll set? Well, you've come to the right talk! With a little bit of MV-HEVC magic, a particular projection algorithm, and special metadata, we'll be able to describe how Immersive video works, and how it differs from classic Spatial video. I'll also go over all the innovations that are happening in the VR field, and introduce you to the plethora of new formats recently released, with a few tips and tricks on how to support them.

Read more

17:45 GMT

Matt McClure

Demuxed

Closing Remarks

09:30 GMT

Matt McClure

Demuxed

Opening Remarks

09:35 GMT

Hadi Amirpour

University of Klagenfurt

Your Player is Smarter Than You Think: Self-Learning in Adaptive Streaming